innsight - Get the insights of your neural network

Introduction

innsight is an R package that interprets the behavior and explains individual predictions of modern neural networks. Many methods for explaining individual predictions already exist, but hardly any of them are available in R: most of these so-called feature attribution methods are implemented only in Python and are therefore difficult for the R community to access. The package innsight provides a common interface to various interpretability methods for neural networks and can thus be considered an R analogue to iNNvestigate or Captum for Python.

This package implements several model-specific interpretability (feature attribution) methods for neural networks in R, e.g.,

- Layer-wise Relevance Propagation (LRP)

  - Including propagation rules: $\varepsilon$-rule and $\alpha$-$\beta$-rule

- Deep Learning Important Features (DeepLift)

  - Including propagation rules for non-linearities: Rescale rule and RevealCancel rule

- DeepSHAP

- Gradient-based methods:

  - Vanilla Gradient, including Gradient x Input

  - Smoothed gradients (SmoothGrad), including SmoothGrad x Input

  - Integrated gradients

  - Expected gradients

- Connection Weights
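As a minimal illustration of what a gradient-based attribution computes (not of how innsight implements it), the sketch below applies Gradient x Input to a toy linear function in base R. The weights `w`, the bias `b`, and the helper `num_grad` are hypothetical names introduced only for this example, and the gradient is approximated by central finite differences rather than by torch's automatic differentiation.

```r
# Toy "model": a linear function f(x) = sum(w * x) + b (hypothetical values).
w <- c(0.5, -1.0, 2.0)
b <- 0.1
f <- function(x) sum(w * x) + b

# Hypothetical helper: central finite-difference approximation of the gradient.
num_grad <- function(f, x, h = 1e-6) {
  vapply(seq_along(x), function(i) {
    e <- replace(numeric(length(x)), i, h)  # step of size h in coordinate i
    (f(x + e) - f(x - e)) / (2 * h)
  }, numeric(1))
}

x <- c(1, 2, 3)

# Vanilla Gradient: sensitivity of the output to each input feature.
grad <- num_grad(f, x)       # approximately equal to w

# Gradient x Input: the gradient scaled by the feature values.
grad_x_input <- grad * x     # approximately c(0.5, -2, 6)
```

For a linear function, the Gradient x Input attributions sum to `f(x) - b`, which is one way to see why the product with the input is often easier to read as a per-feature contribution than the raw gradient.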

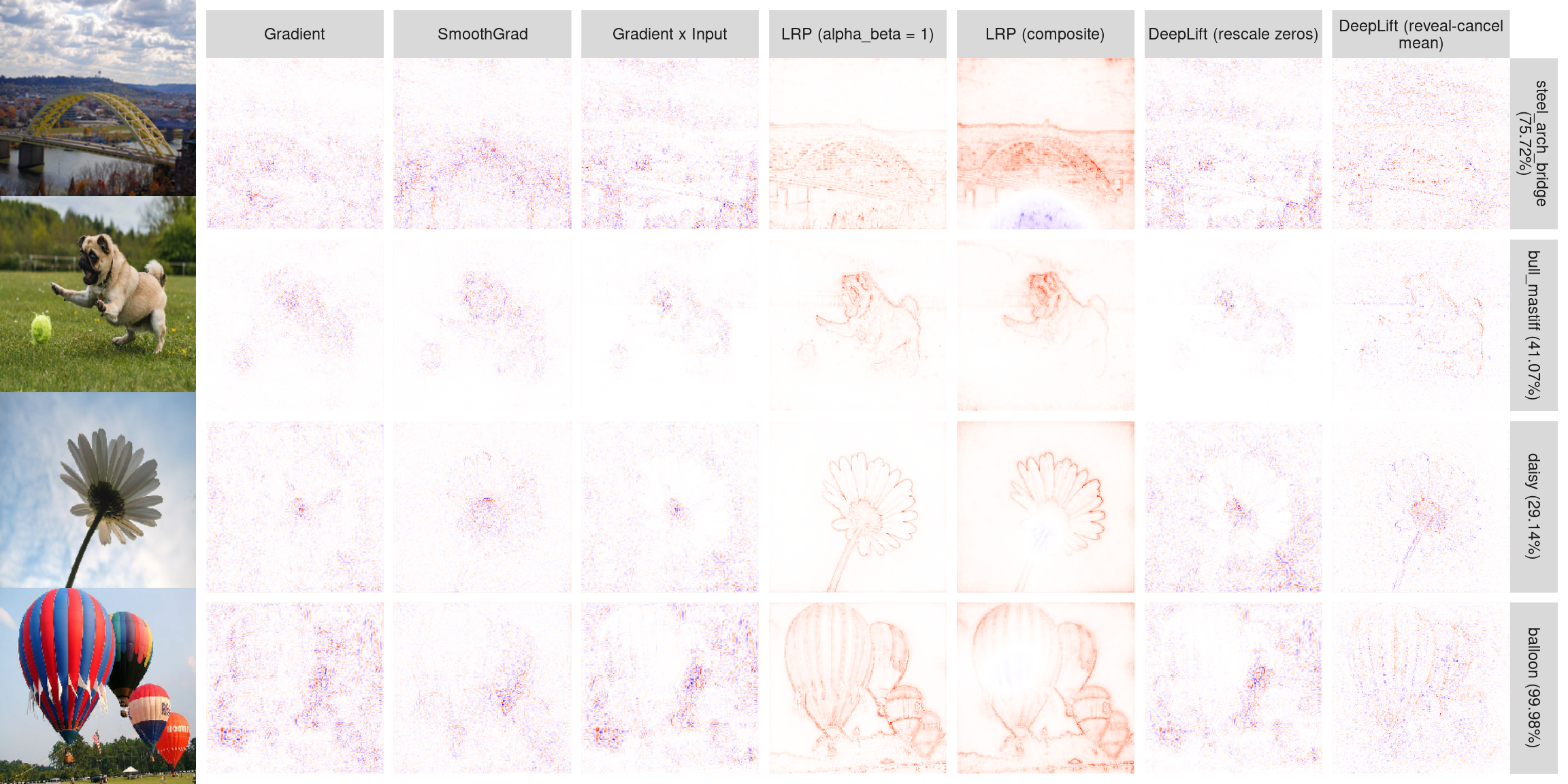

Example results for these methods on ImageNet with pretrained network VGG19 (see Example 3: ImageNet with keras for details):

The package innsight aims to be as flexible as possible and independent of the deep learning package in which the passed network was trained. A neural network from the libraries torch, keras, or neuralnet can be passed and is internally converted into a torch model with the special insights needed for interpretation. It is also possible to pass an arbitrary network in the form of a named list (see vignette for details).

Installation

The stable version can be installed from CRAN and the development version from GitHub with the following commands (the development version requires the devtools package):

```r
# Stable version
install.packages("innsight")

# Development version
devtools::install_github("bips-hb/innsight")
```

Internally, any passed model is converted to a torch model, so this package relies on a complete and correct installation of torch. For this reason, the following command must be run manually to install the missing libraries LibTorch and LibLantern:

torch::install_torch()

📝 Note

Currently, this can lead to problems under Windows if the Visual Studio runtime is not pre-installed. See the corresponding issue on GitHub; for more information and other problems with installing torch, see the official installation vignette of torch.
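Before running any method, it can be useful to check whether the torch backend is actually available. The following is a small sketch of such a check; `torch_backend_ready` is a hypothetical helper name, and the check relies on `torch::torch_is_installed()` from the torch package. It only reports the state and does not install anything.

```r
# Hypothetical helper: TRUE only if the torch package is installed and its
# backend libraries (LibTorch and LibLantern) have been downloaded.
torch_backend_ready <- function() {
  requireNamespace("torch", quietly = TRUE) && torch::torch_is_installed()
}

if (!torch_backend_ready()) {
  message("torch backend not found; run torch::install_torch() first.")
}
```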

Usage

You have a trained neural network `model` and your model input data `data`. To interpret individual data points or the overall behavior with the methods from the package innsight, follow this pseudo code:

```r
# --------------- Step 0: Train your model -----------------
# 'model' has to be an instance of either torch::nn_sequential,
# keras::keras_model_sequential, keras::keras_model or neuralnet::neuralnet
model <- ...

# -------------- Step 1: Convert your model ----------------
# For keras and neuralnet
converter <- convert(model)
# For a torch model the argument 'input_dim' is required
converter <- convert(model, input_dim = model_input_dim)

# -------------- Step 2: Apply method ----------------------
# 'run_method' is a placeholder for one of the package's run_* functions
# Apply global method
result <- run_method(converter) # no data argument is needed
# Apply local methods
result <- run_method(converter, data)

# -------------- Step 3: Get and plot results --------------
# Get the results as an array
res <- get_result(result)
# Plot individual results
plot(result)
# Plot an aggregated plot of all given data points in argument 'data'
plot_global(result)
boxplot(result) # alias of `plot_global` for tabular and signal data
# Interactive plots can also be created for both methods
plot(result, as_plotly = TRUE)
```
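The steps above can be sketched with a concrete method. The example below is a minimal sketch, assuming that innsight and torch (including its backend) are installed and that the installed innsight version exports `convert()` and `run_grad()`. It uses `run_grad()` as one possible choice for the `run_method` placeholder. The function name `explain_with_gradients`, the untrained toy network, and the random input data are hypothetical and serve only to show the call sequence.

```r
# Hypothetical wrapper around the four steps; nothing is run until it is called.
explain_with_gradients <- function(n_samples = 10) {
  # Step 0: an untrained toy network with 4 inputs and 3 outputs
  model <- torch::nn_sequential(
    torch::nn_linear(4, 8),
    torch::nn_relu(),
    torch::nn_linear(8, 3),
    torch::nn_softmax(dim = 2)
  )

  # Step 1: torch models need the input dimension
  converter <- innsight::convert(model, input_dim = c(4))

  # Step 2: a local method needs data (here: random numeric tabular data)
  data <- matrix(rnorm(n_samples * 4), nrow = n_samples)
  result <- innsight::run_grad(converter, data)

  # Step 3: return the attributions as an array
  innsight::get_result(result)
}
```

Calling `res <- explain_with_gradients(10)` should return an array whose first dimension indexes the 10 data points; `plot()` and `plot_global()` would instead be applied to the method object created in Step 2.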

For a more detailed high-level introduction, see the introduction vignette, and for a full in-depth explanation with all the possibilities, see the “In-depth explanation” vignette.

Examples

- Iris dataset with torch model (numeric tabular data) → vignette

- Penguin dataset with torch model and trained with luz (numeric and categorical tabular data) → vignette

- ImageNet dataset with pre-trained models in keras (image data) → article

Contributing and future work

If you would like to contribute, please open an issue or submit a pull request.

This package becomes more valuable the more people use it for their analyses. Therefore, don't hesitate to contact me or create a feature request if you are missing something for your analyses or have ideas for extending this package. Currently, we are working on the following:

- [ ] GPU support

- [ ] More methods, e.g. Grad-CAM, etc.

- [ ] More examples and documentation (contact me if you have a non-trivial application for me)

Citation

If you use this package in your research, please cite it as follows:

```bibtex
@Article{,
  title = {Interpreting Deep Neural Networks with the Package {innsight}},
  author = {Niklas Koenen and Marvin N. Wright},
  journal = {Journal of Statistical Software},
  year = {2024},
  volume = {111},
  number = {8},
  pages = {1--52},
  doi = {10.18637/jss.v111.i08},
}
```

Funding

This work is funded by the German Research Foundation (DFG) in the context of the Emmy Noether Grant 437611051.